For several years, DNS-OARC has been collecting DNS query data “from busy and interesting DNS name servers” as part of an annual “Day-in-the-Life” (DITL) effort (an effort originated by CAIDA in 2002) that I discussed in the first blog post in this series. DNS-OARC currently offers eight such data sets, covering the queries to many but not all of the 13 DNS root servers (and some non-root data) over a two-day period or longer each year from 2006 to present. With tens of billions of queries, the data sets provide researchers with a broad base of information about how the world is interacting with the global DNS as seen from the perspective of root and other name server operators.

In order for second-level domain (SLD) blocking to mitigate the risk of name collisions for a given gTLD, the SLDs associated with at-risk queries must occur with sufficient frequency and geographical distribution to be captured in the DITL data sets with high probability. But SLD blocking is a purely quantitative countermeasure: it is based only on the occurrence of a query, not on the context around it, so it offers no model for distinguishing at-risk queries from queries that are not at risk. Consequently, to be effective, SLD blocking must rely on a stronger assumption: that queries involving any given SLD, at-risk or not, occur with sufficient frequency and geographical distribution to be captured with high probability.

Put another way, the DITL data set – limited in time to an annual two-day period and in space to the name servers that participate in the DITL study – offers only a sample of the queries from installed systems, not statistically significant evidence of their behavior and of which at-risk queries are actually occurring.

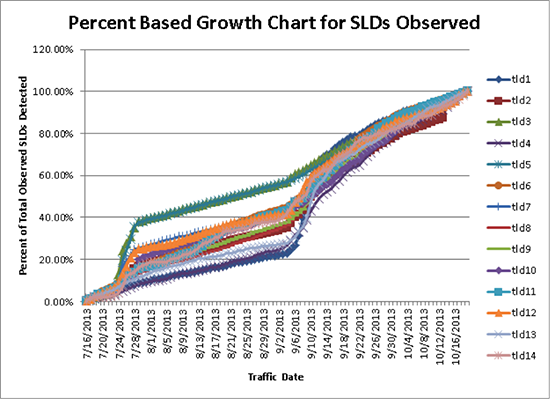

A concrete example will illustrate the point. Continuing the observations and analysis started in *New gTLD Security and Stability Considerations* and *New gTLD Security, Stability, Resiliency Update: Exploratory Consumer Impact Analysis*, Verisign Labs analyzed the SLDs in queries for 14 applied-for gTLDs at the A and J root servers during the period from July 16 to October 19, 2013. The 14 applied-for gTLDs were chosen arbitrarily as a sample set with a range of query volumes, not based on any assumptions about their SLD activity.

To be consistent with the SLD blocking proposal, the research team excluded from further analysis any SLD that did not consist only of alphanumeric characters and dashes, and any that appeared to consist of 10 random characters (the so-called “Chrome random strings”).
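The two exclusion rules can be sketched in a few lines of Python. This is a minimal illustration, not the research team's actual tooling: the function names are hypothetical, and the "Chrome random string" test here is a deliberately crude assumption (any label of exactly 10 lowercase letters), which will also discard ordinary 10-letter words.

```python
import re

# Letters, digits, and dashes only. This is one reading of "alphanumeric
# characters and dashes"; the study's exact rule is not given in the post.
VALID_SLD = re.compile(r"[a-z0-9-]+")

# Hypothetical stand-in for the "Chrome random string" test: exactly 10
# lowercase letters. It over-matches real 10-letter words.
CHROME_LIKE = re.compile(r"[a-z]{10}")


def extract_sld(qname):
    """Return the second-level label of a query name, or None if absent."""
    labels = qname.rstrip(".").lower().split(".")
    if len(labels) < 2:
        return None
    return labels[-2]


def keep_sld(sld):
    """Return True if the SLD survives both exclusion rules."""
    if not VALID_SLD.fullmatch(sld):
        return False
    return CHROME_LIKE.fullmatch(sld) is None
```

For example, `extract_sld("wpad.corp.example.")` yields `corp`, which `keep_sld` retains, while a label such as `abcdefghij` or `bad_label` is dropped.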

Verisign Labs then recorded the cumulative number of distinct SLDs detected as of each day of the period, starting with 0 and leading up to the total number of SLDs observed for the entire period. To normalize across the different TLDs, the cumulative number per day was then divided by the total for the period to produce a cumulative percentage, starting with 0% and ending with 100%. The results are shown in the accompanying chart.
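The normalization described above can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the code used for the study: the input is assumed to be a list of `(day, sld)` pairs for a single TLD, and the function name is hypothetical.

```python
from datetime import date


def cumulative_sld_percentages(observations):
    """Return [(day, percent)] where percent is the share of all distinct
    SLDs in the period that had been seen at least once by that day.

    observations: iterable of (day, sld) pairs for one TLD (assumed format).
    """
    observations = list(observations)

    # Earliest day on which each distinct SLD appears.
    first_seen = {}
    for day, sld in observations:
        if sld not in first_seen or day < first_seen[sld]:
            first_seen[sld] = day

    total = len(first_seen)
    if total == 0:
        return []

    # Number of SLDs seen for the first time on each day.
    new_per_day = {}
    for day in first_seen.values():
        new_per_day[day] = new_per_day.get(day, 0) + 1

    # Walk the observed days in order, accumulating the running count.
    result = []
    running = 0
    for day in sorted({day for day, _ in observations}):
        running += new_per_day.get(day, 0)
        result.append((day, 100.0 * running / total))
    return result
```

If the curve produced this way flattens early, a short sampling window would capture most SLDs; if it keeps climbing to the last day, as reported here, any short window misses a large share of them.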

The cumulative percentage grows gradually over the three-month period, with no obvious slowdown at the end; new SLDs continue to be observed each day at a similar rate. This is the case for all of the gTLDs measured. A sub-period as short as the one in the DITL data sets would not capture the full set of SLDs, even including the data from other root servers.

One observer offered an apt analogy: “This would be like you deciding from a one-day weather report how to dress for the rest of the year. If it’s sunny, you’d go out without your umbrella every day, but that may not be a good idea.”[1]

Verisign Labs recently performed a similar, though preliminary, analysis of the queries seen at the A and J root servers for the recently delegated gTLDs whose SLD blocking lists were published on October 29:

.camera, .clothing, .equipment, .guru, .holdings, .lighting, .singles, .ventures, .voyage

The analysis indicates, as anticipated, that installed systems are sending many queries to the root server system that include SLDs not on the blocking lists.

One example is particularly illuminating.

In the second technical report, Verisign Labs identified the WPAD and ISATAP query types as potential risk vectors. These are not the only types of queries that could put an installed system at risk if the behavior of the global DNS were to change, but they are important ones because of the widespread use of the WPAD and ISATAP protocols in network configuration.

In data collected at the A and J root servers between July and October 2013, Verisign Labs observed 918 WPAD queries and 403 ISATAP queries from one third-level domain within one of the recently delegated gTLDs. This third-level domain was within an SLD not on the blocking list.
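A count of this kind could be produced along the following lines. The sketch assumes that WPAD and ISATAP lookups are recognized by a leftmost label of `wpad` or `isatap`, which is how those protocols commonly form DNS names; the post does not say how Verisign Labs classified queries, and the function name, input format, and `.example` names are purely illustrative.

```python
from collections import Counter

# Assumed mapping from leftmost label to query type.
RISK_LABELS = {"wpad": "WPAD", "isatap": "ISATAP"}


def unblocked_risk_counts(qnames, blocked_slds):
    """Count WPAD/ISATAP-style queries per SLD, skipping blocked SLDs.

    qnames: iterable of fully qualified query names (assumed format).
    blocked_slds: set of SLD labels on the blocking list for the TLD.
    """
    counts = Counter()
    for qname in qnames:
        labels = qname.rstrip(".").lower().split(".")
        if len(labels) < 3:
            continue  # need at least a host label, an SLD, and a TLD
        kind = RISK_LABELS.get(labels[0])
        sld = labels[-2]
        if kind is not None and sld not in blocked_slds:
            counts[(sld, kind)] += 1
    return counts
```

Any non-zero entry in the result corresponds to an SLD that could be registered while installed systems are still sending WPAD or ISATAP queries for names beneath it.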

Because the SLD is not on the blocking list, it is possible that the SLD could be registered within the gTLD, changing the behavior of the global DNS for the installed system that generated these queries, with unpredictable consequences. In addition, as noted, many other SLDs not on the blocking lists occur in the queries to the root server system. Changes to the global DNS for those SLDs could likewise impact installed systems.

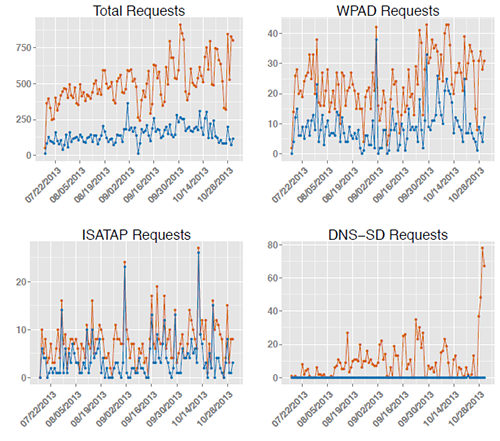

For reference, the traffic volume that Verisign Labs measured at the A and J root servers for the gTLD is visualized in the accompanying graphs. The top line of each graph shows the total number of queries measured during the period (excluding, as in the previous analysis, queries for domain names that did not consist only of alphanumeric characters and dashes, and the so-called “Chrome random strings”). The bottom line shows what the total number would have been if queries with SLDs on the blocking list were also excluded.
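The two lines in each graph correspond to two per-day tallies, which can be sketched as below. The input format (one `(day, sld)` pair per query, already filtered as described earlier) and the function name are assumptions made for illustration.

```python
from collections import Counter


def daily_totals(queries, blocked_slds):
    """Return {day: (total, remaining)} for one TLD.

    total:     all queries counted that day (the "top line").
    remaining: queries whose SLD is not on the blocking list
               (the "bottom line").
    """
    total = Counter()
    remaining = Counter()
    for day, sld in queries:
        total[day] += 1
        if sld not in blocked_slds:
            remaining[day] += 1
    return {day: (total[day], remaining[day]) for day in sorted(total)}
```

The gap between the two numbers is the traffic that blocking would address; the second number is what would still reach a newly registered SLD.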

The SLD mentioned above is the primary source for the unmitigated WPAD and ISATAP requests. Many other SLDs contribute to the total requests. Clearly, a significant number of potentially at-risk queries would remain if blocking were implemented.

Until a more detailed analysis has been done of the queries and the installed systems that generated them, it is not possible to draw a full conclusion about the impact on installed systems if the responses to those queries were to change as a result of the registration of an SLD in the new gTLDs. However, the evidence at this point underscores the argument that the list of SLDs observed in the DITL data is an inadequate basis for determining which queries may put installed systems at risk.

Additional posts in this series:

- Part 1 of 4 – Introduction: ICANN’s Alternative Path to Delegation

- Part 3 of 4 – Name Collision Mitigation Requires Qualitative Analysis

- Part 4 of 4 – Conclusion: SLD Blocking Is Too Risky without TLD Rollback

[1] “ICANN Scraps Name Collision Plan, Reducing Delay for Hundreds of New gTLDs.” Washington Internet Daily. Vol. 14, no. 197, October 10, 2013.